I’ve been speaking on integrating Python and SQL Server for a while now, and having given the same presentation at nearly a dozen SQL Saturdays, PASS Summit, and multiple user groups, it’s about time to retire my favourite session. Just in case there’s anyone out there who is interested in the subject but hasn’t managed to see it yet, I thought I’d start sharing some of the code and use-cases in a series of blog posts.

We’ll start off with a simple step-by-step introduction to the sp_execute_external_script stored procedure. This is the glue that enables us to integrate SQL Server with the Python engine by sending the output of a T-SQL query over to Python and getting a result set back. . For example, we could develop a stored procedure to be used as a data set in an SSRS report that returns statistical data produced by a Python library such as SciPy. In this post we’ll introduce the procedure and how to pass a simple data set into and back out of Python, and we’ll get into manipulating that data in a future post.

If you’d like to follow along with me, you’ll need to make sure that you’re running SQL Server 2017 or later, and that Machine Learning Services has been installed with the Python option checked. Don’t worry if you installed both R and Python, as they play quite nicely together. The SQL Server Launchpad service should be running, and you should make sure that the ability to execute the sp_execute_external_script procedure is enabled by running the following code:

-- Introduction To sp_execute_external_script With Python - Query 1.00

-- Enabling sp_execute_external_script.

EXEC sp_configure 'external scripts enabled', 1;

RECONFIGURE;

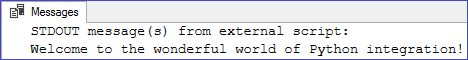

As with any introduction to a new language, we’re contractually obligated to kick things off with a “Hello, world!”-style script. You’ll note that our first procedure call below has only two parameters. The @language parameter says that we’ll be using Python code as opposed to R, and that the Launchpad service should start instantiating the Python engine. The @script parameter then contains the Python code that we’ll be executing, which in this case is a simple print statement:

-- Introduction To sp_execute_external_script With Python - Query 1.01

-- Calling the procedure with no input or output.

EXEC sp_execute_external_script

@language = N'Python',

@script = N'print("Welcome to the wonderful world of Python integration!")';

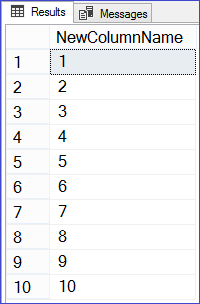

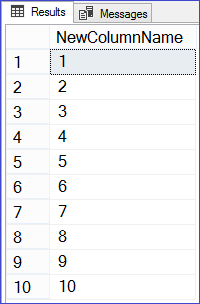

Now that we know our Python integration is working, the next step is to send some data over from SQL Server and get it back out. We specify a T-SQL query to run in the @input_data_1 parameter; the SQL engine will execute this query and send the resulting data set over to Python. The @script parameter has now been updated to simply say that we will take that data, identified by its default name of InputDataSet, copy it over to OutputDataSet (another default) and then return it to SQL Server. I’ve got a tally table (a table of sequential numbers) set up in my Admin database, and I’ll begin by just sending across the first ten numbers from that table:

-- Introduction To sp_execute_external_script With Python - Query 1.02

-- Passing the top ten numbers from our tally table into Python

-- and getting them right back out.

USE Admin;

GO

EXEC sp_execute_external_script

@language = N'Python',

@script = N'OutputDataSet = InputDataSet',

@input_data_1 = N'SELECT TOP 10 * FROM dbo.Tally;'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

If for some reason you don’t have or are not able to add a tally table to your system, you can use the ROW_NUMBER() function against the sys.objects DMO instead:

-- Introduction To sp_execute_external_script With Python - Query 1.02b

-- Using sys.objects instead of a tally table.

EXEC sp_execute_external_script

@language = N'Python',

@script = N'OutputDataSet = InputDataSet',

@input_data_1 = N'SELECT TOP 10 ROW_NUMBER() OVER (ORDER BY name) FROM sys.objects;'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

There’s no need to keep the default name of “InputDataSet” for our input data set. I’m quite partial to “myQuery” myself. Renaming it is as simple as adding another parameter called @input_data_1_name. Of course, we have to remember to update the python code in @script to reflect the new name:

-- Introduction To sp_execute_external_script With Python - Query 1.03

-- Using a custom name for the input data.

USE Admin;

GO

EXEC sp_execute_external_script

@language = N'Python',

@script = N'OutputDataSet = myQuery',

@input_data_1 = N'SELECT TOP 10 * FROM dbo.Tally;',

@input_data_1_name = N'myQuery'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

Unsurprisingly, we can also change the name of our output data set by adding the @output_data_1 parameter, and updating @script accordingly:

-- Introduction To sp_execute_external_script With Python - Query 1.04

-- Using custom names for both the input and output data.

USE Admin;

GO

EXEC sp_execute_external_script

@language = N'Python',

@script = N'Results = myQuery',

@input_data_1 = N'SELECT TOP 10 * FROM dbo.Tally;',

@input_data_1_name = N'myQuery',

@output_data_1_name = N'Results'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

I should pause here to point out that although having parameters named @input_data_1, @input_data_1_name, and @output_data_1_name may imply that we can manage multiple input and output data sets with sp_execute_external_script, that is sadly not the case. I’m hoping that this naming convention was chosen to allow room for that to happen in a future release (hint, hint, product team!)

Although this input query is very simple, in the real world we might be sending over the output of some very complex queries. The input query in one of the use cases in the session I’ve been presenting is over 150 lines! To make this more manageable in our integration, rather than embedding the whole query in our procedure call and making an unholy mess, we’ll instead store it in a variable called @InputQuery and pass that in to @input_data_1. This allows us to use our standard T-SQL coding style, and has the added effect of being easy to copy-and-paste into a new query window for development and/or, heaven-forbid, debugging.

-- Introduction To sp_execute_external_script With Python - Query 1.05

-- Variablizing the input data query.

USE Admin;

GO

DECLARE @InputQuery NVARCHAR(500);

SET @InputQuery = N'

SELECT TOP 10

SequenceNumber

FROM

dbo.Tally;

';

EXEC sp_execute_external_script

@language = N'Python',

@script = N'Results = myQuery',

@input_data_1 = @InputQuery,

@input_data_1_name = N'myQuery',

@output_data_1_name = N'Results'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

(Yes, “variablizing” is a real word. I checked.)

We can do the same thing with our Python code, which in this example we’ve stored in a variable named @PyScript and then passed in to the @script parameter:

-- Introduction To sp_execute_external_script With Python - Query 1.06

-- Variablizing both the input data query and the Python script.

USE Admin;

GO

DECLARE

@InputQuery NVARCHAR(500),

@PyScript NVARCHAR(500);

SET @InputQuery = N'

SELECT TOP 10

SequenceNumber

FROM

Admin.dbo.Tally;

';

SET @PyScript = N'

Results = myQuery

';

EXEC sp_execute_external_script

@language = N'Python',

@script = @PyScript,

@input_data_1 = @InputQuery,

@input_data_1_name = N'myQuery',

@output_data_1_name = N'Results'

WITH RESULT SETS

((

NewColumnName INT NOT NULL

));

Look at that nice clean procedure call! Marvel at how the business logic in our T-SQL query and our Python code is separated out, formatted nicely, and ready to copy into another window or IDE! Can we all now agree to use this format for our sp_execute_external_script calls going forward?

This concludes the end of part one, but we’ll return with part two very soon, in which we start to manipulate our data set in Python and see what happens when things don’t go so well…